Using Diffrhythm AI as a Songwriting Sketchpad, Not a Finished-Mix Machine

There’s a common trap with AI music tools: you either treat them like toys (and dismiss them), or you expect them to replace a full production pipeline (and get disappointed). A more useful middle ground is to treat Diffrhythm AI as a sketchpad: a way to externalize an idea in audio form so you can evaluate melody, phrasing, pacing, and emotional tone before you invest serious time.

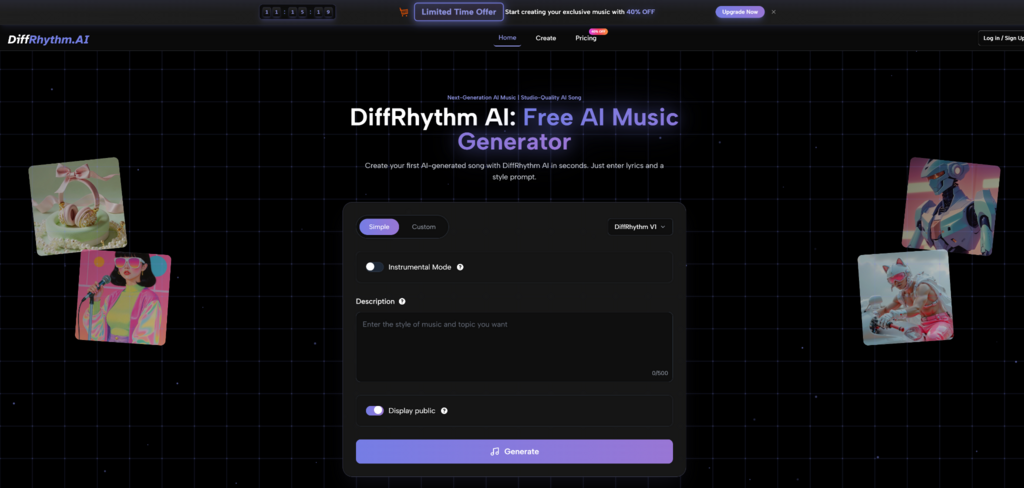

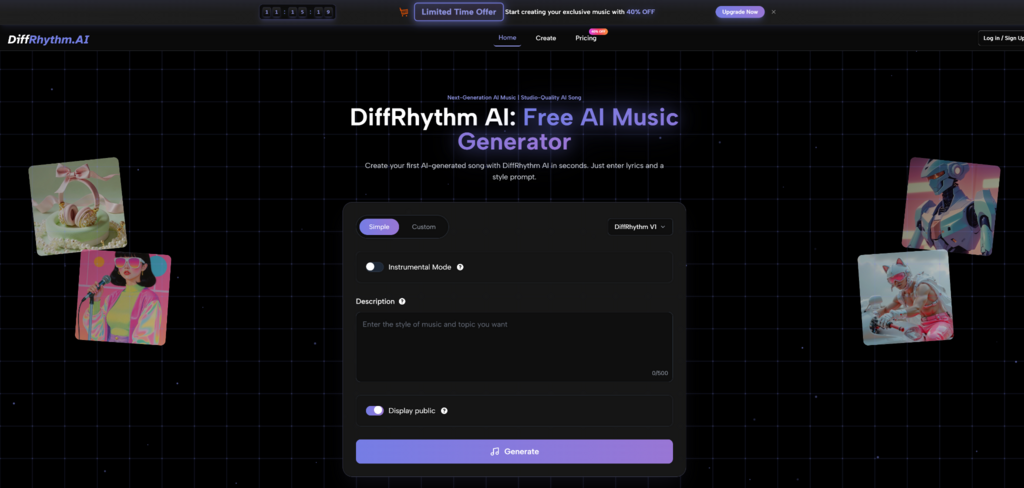

Diffrhythm AI is designed around a specific workflow: lyrics + a style prompt, with the goal of generating a complete song attempt that includes vocals and accompaniment. That is a meaningful difference from tools that mainly excel at loops, textures, or instrumental beds.

What “Sketchpad” Means in Music Terms

A sketch doesn’t need perfect EQ. It needs truth: does the idea work?

With a sketchpad approach, you’re listening for:

- Contour: does the melody move in a way that matches the lyric?

- Prosody: do stressed syllables land where they should?

- Structure: does it feel like verse → chorus, not a wandering loop?

- Energy curve: does it build and release tension?

Diffrhythm AI Music can help you surface these answers quickly, especially if you keep your inputs disciplined: short lyrics and a clear style brief.

A Producer-Friendly Way to Prompt Without Fighting the Model

Start with a “production brief” style prompt

Instead of a single-word genre, write a short brief:

- Genre + mood (e.g., “indie rock, nostalgic”)

- Drum character (e.g., “tight kick/snare, moderate swing”)

- Harmonic palette (e.g., “warm guitars, simple chords”)

- Vocal intent (e.g., “intimate, slightly breathy, clear chorus”)

Example prompt:

“alt-pop, bittersweet, punchy drums, warm bass, airy synth pads, clear female vocal, mid-tempo”

Why this helps

The more “musical role” information you provide (drums, bass, pads, vocal tone), the less the model has to infer.
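As a rough illustration, the brief can be kept as separate fields and joined into one prompt string. This is only a local text-formatting sketch: the `StyleBrief` class and its field names are hypothetical and are not part of any Diffrhythm AI interface.

```python
from dataclasses import dataclass


@dataclass
class StyleBrief:
    """Hypothetical container for the parts of a production brief."""
    genre_mood: str
    drums: str
    harmony: str
    vocal: str
    tempo: str = ""  # optional extra descriptor, e.g. "mid-tempo"

    def to_prompt(self) -> str:
        # Join the non-empty parts into one comma-separated style prompt.
        parts = [self.genre_mood, self.drums, self.harmony, self.vocal, self.tempo]
        return ", ".join(p.strip() for p in parts if p.strip())


brief = StyleBrief(
    genre_mood="alt-pop, bittersweet",
    drums="punchy drums",
    harmony="warm bass, airy synth pads",
    vocal="clear female vocal",
    tempo="mid-tempo",
)
print(brief.to_prompt())
```

Keeping the roles in separate fields makes it easy to change one element (say, the drum character) between generations while holding the rest constant.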

The “Two-Pass” Method: Structure First, Detail Second

Pass 1 — Validate structure

Use short lyrics:

- Verse: 4 lines

- Chorus: 4 lines

Generate two or three versions, then keep the one whose structure feels most coherent.

Pass 2 — Expand lyrics once the skeleton works

Now add:

- A second verse

- A bridge (optional)

- A slightly longer chorus

This approach reduces wasted generations because you’re not trying to solve everything at once.
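One way to keep yourself honest about the first pass is a small check on the lyric draft before you generate anything. The sketch below assumes lyrics are stored as a dictionary of section names to lines; the function name and the section labels are illustrative choices, not something the tool requires.

```python
def check_pass_one(sections: dict[str, list[str]], max_lines: int = 4) -> list[str]:
    """Return a list of problems; an empty list means the draft fits pass 1."""
    problems = []
    # Pass 1 expects exactly two short sections: a verse and a chorus.
    for name in ("verse", "chorus"):
        lines = sections.get(name, [])
        if not lines:
            problems.append(f"missing {name}")
        elif len(lines) > max_lines:
            problems.append(f"{name} has {len(lines)} lines, expected at most {max_lines}")
    # Anything else (second verse, bridge) belongs in pass 2.
    extra = sorted(set(sections) - {"verse", "chorus"})
    if extra:
        problems.append("save for pass 2: " + ", ".join(extra))
    return problems


# Placeholder lyric lines, for illustration only.
draft = {
    "verse": ["line 1", "line 2", "line 3", "line 4"],
    "chorus": ["line 1", "line 2", "line 3", "line 4"],
}
print(check_pass_one(draft))
```

An empty result means the draft is small enough for a structure-only pass; once a generation works, the extra sections can be added back for pass 2.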

Comparison Table: Where It Fits in a Real Workflow

| Workflow Stage | Diffrhythm AI | DAW-First Production | Loop/Texture Generators |
| --- | --- | --- | --- |
| Ideation speed | High (fast song-shaped drafts) | Medium/low (setup + crafting) | High (fast loops) |
| Lyric-to-vocal exploration | Built-in focus | Requires a vocalist or synth-vocal tools | Often not supported |
| Structure (verse/chorus feel) | Attempts full form | Fully controllable | Usually loop-oriented |
| Fine control (mix, arrangement) | Limited | Full | Medium |
| Best role | Sketching and auditioning concepts | Final production and polish | Background beds and layers |

This framing is the healthiest way to use it: ideation and concept selection, then move into your deeper tools when needed.

How to Judge Outputs Like a Producer (Without Over-Expecting)

Listen for “musical decisions,” not “audio quality”

Even if the mix isn’t perfect, you can still evaluate:

- Whether the chorus lifts

- Whether the vocal phrasing supports the lyric

- Whether the harmonic movement matches the mood

A helpful checklist

- Does the hook feel like a hook?

- Does the vocal line respect syllable stress?

- Does the instrumental arrangement leave space for the voice?

- Does the energy curve make sense?

If you get “yes” on 2–3 of these, the idea may be worth taking further.
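If you are comparing several generations, it can help to tally the checklist the same way each time. The following is a minimal sketch: the item labels paraphrase the checklist above, and the default threshold of 2 simply reflects the "2–3 yes answers" rule of thumb rather than any measured cutoff.

```python
CHECKLIST = (
    "hook feels like a hook",
    "vocal line respects syllable stress",
    "arrangement leaves space for the voice",
    "energy curve makes sense",
)


def worth_pursuing(answers: dict[str, bool], threshold: int = 2) -> bool:
    """Return True when enough checklist items were answered 'yes'."""
    # Unanswered items count as "no" so a partial review cannot pass by accident.
    yes_count = sum(1 for item in CHECKLIST if answers.get(item, False))
    return yes_count >= threshold


# Example review of one generation (the answers are your own listening notes).
take_a = {
    "hook feels like a hook": True,
    "vocal line respects syllable stress": False,
    "arrangement leaves space for the voice": True,
    "energy curve makes sense": False,
}
print(worth_pursuing(take_a))
```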

A “Before vs After” Bridge for Songwriters

Before

Your lyric exists as text. The melody is implied, not tested. You can’t reliably tell whether the phrasing sings well until you record a demo.

After

You can audition melodic interpretations quickly:

- Try the lyric as a ballad, then as upbeat pop.

- Notice what emotional frame the words naturally prefer.

- Identify weak lines that don’t sing well (and rewrite them).

This is less about outsourcing creativity and more about getting feedback from sound.

Limitations That Actually Matter

A realistic view makes the tool more credible.

1) Variability is the default

Even with the same input, different generations can diverge. That is not always a flaw, since variation gives you options to choose from, but it means you should expect to curate.

2) Complex diction may reduce clarity

Names, technical terms, and dense consonants can cause vocal articulation to feel less natural.

3) Genre edges can be less stable

If you ask for very specific subgenres or unconventional instrumentation, the output may drift toward more common patterns.

Mitigation strategy

Simplify and constrain

- Use shorter lines.

- Avoid ultra-specific niche tags; describe instruments instead.

- Add punctuation to guide phrasing.
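The first and last of these tips can be checked mechanically before you paste lyrics into the tool. The sketch below flags long lines and lines with no punctuation; the eight-word limit is an arbitrary assumption chosen for illustration, so adjust it to your own material.

```python
def lint_lyrics(lyrics: str, max_words: int = 8) -> list[str]:
    """Flag lyric lines that are long or carry no punctuation at all."""
    warnings = []
    for number, line in enumerate(lyrics.splitlines(), start=1):
        text = line.strip()
        if not text:
            continue  # blank lines separate sections; nothing to check
        word_count = len(text.split())
        if word_count > max_words:
            warnings.append(f"line {number}: {word_count} words, consider shortening")
        # Apostrophes are deliberately not counted as phrasing punctuation.
        if not any(ch in ".,;:!?" for ch in text):
            warnings.append(f"line {number}: no punctuation to guide phrasing")
    return warnings


# Placeholder lyric, for illustration only.
print(lint_lyrics("Hold on, the night is young."))
```

A lint like this only catches surface issues; whether a line actually sings well is still something you judge by ear from the generated draft.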

Staying Grounded on Legal and Cultural Context

AI music is not just a technical topic; it’s also becoming a policy topic. If you publish widely, it’s worth keeping an eye on neutral references that discuss how authorship, training, and licensing are being debated. One accessible place to start is the U.S. Copyright Office’s public AI materials: https://www.copyright.gov/ai/

The takeaway isn’t fear—it’s awareness. Treat publishing norms and licensing expectations as evolving.

Who This Approach Works For

Diffrhythm AI is a strong fit if you want

- Fast drafts of lyrical ideas.

- A way to audition melody and structure without recording.

- Multiple “interpretations” of the same lyric to choose from.

It’s less ideal if you need

- Full mix control and consistent stems every time.

- Perfect vocal nuance with zero iteration.

- Highly specific production fingerprints on the first try.

Closing Thought

Diffrhythm AI becomes most valuable when you stop asking it to be a finished-mix replacement and start using it as a songwriting mirror: a fast way to hear what your lyric could become. If you iterate thoughtfully—short lyrics first, clear style briefs, multiple generations to curate—you can turn “text on a page” into something you can actually evaluate, refine, and eventually produce with confidence.